Posts

- Our repository is huge. It's about 380M and so it takes a long time to push to Heroku for the first time. What's worse, if there's an error during the first deployment, Heroku doesn't cache the git repository, so you need to upload it again (and again). I stumbled across a way to push the code without triggering a build, which saved me heaps of time later on as I fixed other build errors.

-

libarchive isn't available on Heroku. We use the ffi-libarchive gem as part of our trace processing, but the underlying library is missing and this stops the build process. I worked around it by removing the gem, but a better approach would involve adding Heroku's apt builder image.

-

Missing settings.local.yml file. There's a bug about this already, and we've added a workaround in all of our install notes, travis configs and so on, but it rears its head again here.

-

Production compilation of i18n-js assets. In our Configuration Guide we mention the need to compile the translation files for the javascript-based internationalisation, but it's not clear to me how to run that stage at the right time on Heroku. It needs to be done either before, or as part of, the assets:precompile that Heroku runs automatically. As a workaround, I just committed the output to the git repo before pushing to Heroku.

-

No ActiveStorage configuration file. This is the nexus of configuration files, twelve-factor, and deployment-agnostic development. I could write a whole essay on this but simply committing config/storage.yml was a good enough workaround for today.

-

Needs a Procfile. Heroku introduced the concept of Procfiles, which list what needs to run to make the whole app work. Here's a Procfile that works for us:

    web: bundle exec rails server -p $PORT
    worker: bundle exec rake jobs:work

This tells Heroku that we want a webserver, but that we also need a worker process to import traces and send emails asynchronously. Heroku then runs both processes automatically, and detects and configures a database for us too.

-

No ActiveStorage for traces. We have our trace import system hardcoded to use local file paths, but on Heroku those are ephemeral. In future we'll move trace importing to using ActiveStorage, but there's a couple of blockers on that for now.

Things I've learned maintaining OpenStreetMap (LRUG presentation)

On the 10th June 2024 I gave a presentation to LRUG, the London Ruby Users Group, about being a software maintainer for the OpenStreetMap website codebase. The description of the talk was:

Maintaining one of the world’s largest non-commercial websites, OpenStreetMap, is a unique challenge. We’re a small, volunteer-based development team, not professional software developers. I will illustrate some of these challenges with a mixture of technical and organisational tips, tricks and recommendations, that you might find useful for your own teams and projects too.

Here’s a video that the team at LRUG kindly made (32 mins) which has the audio and the slides, and below that you’ll find the transcript.

Transcript

Hi everyone, my name is Andy. Every Wednesday is my volunteering day, it’s when I step away from normal work and I do some volunteer development work. So in the mornings I head to my desk, I open up the OpenStreetMap website github repo page and I have a quick check to see if there’s any pull requests that need my review.

Yeah there’s a few, there’s a few more, in fact there’s loads. At the moment we have 137 open pull requests, more than 500 open issues and you might be thinking the same as me which is where on earth do I even start!

So I’m Andy Allan, I’m one of the two volunteer maintainers for the OpenStreetMap website project.

Actually the other maintainer, Tom Hughes, has come along this evening so I need to be very careful about what I say as he’s an expert on things. When I’m not doing volunteer development work I’m the founder of a company called Thunderforest which builds commercial services based on OpenStreetMap data. This evening my talk is split into three parts, I’m going to give you background about OpenStreetMap, and also some background about the OpenStreetMap website project. I’m going to look at some of the challenges that we face, the organisational approaches that we use to deal with that, and then finally some of the technical implementation details which is great to be in front of a technical audience for a change so I actually get to put some code on the screen today.

So let’s start with some background.

OpenStreetMap, if you haven’t heard about it already, is a global volunteer open data mapping project, where we go out and we map all the details about the whole world from scratch.

The data gets used by hundreds of different websites and applications, businesses small like mine or large like Amazon, Apple, Facebook, they all incorporate OpenStreetMap data into their various mapping products.

Lots of people think of it as either a technical project or a data project but in my mind it’s actually a community project. It’s building the thousands of volunteer mappers who are willing to spend their time going out and mapping the world, and on a typical month we have more than 45,000 volunteer mappers who will be walking the streets adding information about their local areas into the system.

The part of this project that I’m talking about tonight is the Ruby on Rails app that powers the core website. So this is the initial commit by Steve Coast, the founder of OpenStreetMap back in 2006 so those of you with very long memories will realise that this is a Rails 1.0 era project that’s been continually maintained for almost two decades. Like most Rails projects, the split covers a few bases.

The first obviously is the website. We also have an XML and JSON API which allows third-party editors to edit OpenStreetMap and the project also contains the definitions for the core database of the project. It’s kind of hard for me to give an overview of such a large project in a small amount of time so here’s just a list of the models in the Rails app with some of the more interesting ones highlighted.

So as well as the OpenStreetMap data we have lots of community features, like we have user diaries, we can comment on changesets, we can block users, report, spam, all these kinds of things. If you’re familiar with the front page of OpenStreetMap, it’s worth me at this point mentioning that a lot of the stuff you see on the front page is not maintained by me and Tom, they are separate projects. So the rendered map images, the search, the routing engines, even the built-in editor and some of the other built-in tools are all separate projects, but the OpenStreetMap website is the one that pulls everything together.

So we have these user-facing separate projects that have some of the functionality of the site. We also have some behind-the-scenes external projects which are to do with speeding up the API, or distributing the data including publishing the data every minute. But like I say the OpenStreetMap website project ties everything together.

To give you an idea of the activity we’ve had 14,000 commits from over 200 individual people in the last 18 years. We’ve got a few thousand tests. Because it’s a global volunteer project it’s important that it’s translated so we have over 100 languages that the website is translated into.

I was shocked when I made that stat but it’s almost 2,000 different people have been involved in translating the website into these languages and we have just under 3,000 source strings in our en.yml file which leads to almost 200,000 translations and these are done by a separate project and automatically copied over into our repo for publication.

So the main challenge that I want to talk about tonight is that this is not developed in the same way as your typical commercial project. We don’t have a big team of people. We have two volunteer maintainers and a few volunteer developers. And for projects this size, this important, this heavily used, that’s quite different from your typical setup with teams of professional software developers, product managers, designers and so on in the background.

And our volunteer developers come along and make one of these pull requests.

It’s worth bearing in mind that there’s no candidate screening, there’s no technical interviews, no onboarding, no pair programming, no senior developers to point them in the right direction. It’s quite different. And because also they’re volunteers, regardless of technical ability, they also have their own priorities. They have limited availability of how many hours a week they’re likely to dedicate and this has implications for the kind of features that we can develop, and how we want to maintain the project. So I want to dig into this a little bit and see some of the techniques that we use in order to handle the fact that all of our development is done both by volunteers and by people of varying skill levels.

One of the key things I like to focus on is making the developer experience as robust as possible and that involves a lot of linters.

So most of you are familiar with RuboCop and its sibling gems. We also use strong migrations which can catch a migration that might block one of these super huge tables if it needs an exclusive lock. We use ERBLint for linting the view files. We use Brakeman to do security testing. We use something that you can barely see on the screen called Annotate which is a nice little gem. It puts comments describing your database tables into the model files and it makes it super easy for people to get started to know what the model does, much more so than digging into the database.

And we put all these into CI. And for those of you who think of RuboCop or any of these linters as a kind of like strict teacher making sure your double quotes are not single quotes or that the spaces in your hashes are consistent, that’s not really how I like to think of it. I like to think of it more as a form of automated code review.

Those kind of syntax layout things are not as interesting as the cops which will teach you something. And the great thing about putting these in CI is that our developers will get instant context specific feedback on the code that they’ve just written. And to me that’s much more important than expecting somebody to have read a Rails book or been on a tutorial or read something general because this is about specifically what they’re focused on.

So for example a Capybara RuboCop thing that might tell you how to make it work with asynchronous methods or going back to the example about database migrations, it turns out loads of the database migrations are safe if you’re doing specific things on specific versions of Postgres. And not only do I not expect the developers to know this, I don’t know it either. So this is super useful for me when I’m doing code reviews to know that I’ve got the backup of all of the collective wisdom of all of these different tools.

The second thing that I like to do is to really concentrate on refactoring. And that’s because our developers tend not to be Ruby experts who are starting work on OpenStreetMap. They’re OpenStreetMap experts who are learning how to do Ruby and Rails by reading what’s here already and copying what we’ve done in the past.

And for an 18-year-old project there’s a lot of things in various corners of the code base which is not how we would do it anymore. So it’s important to refactor this first, otherwise we just get pull requests with - aargh, wouldn’t merge that if I was you.

And the third thing is that we work hard to make sure we follow Rails standards and conventions because we don’t have much internal documentation.

We don’t have much time to explain to people how things work and what they do. So we rely heavily on the fact that if we follow the Rails conventions then there are tutorials, there are the guides, there are YouTube videos which will also explain things. So we shy away from doing our own curious things and try and stick to the main ones.

So for example we got rid of our own file handling to use Active Storage. We got rid of an external job queue in order to use Active Job and things like that. Whenever these new things appear in Rails framework we try and take advantage of them straight away.

When it comes to the technical matters we also need to approach them with the view that again our developers are not Rails experts. And the first topic I want to talk about is output safety. Whenever I’m testing stuff I like to put some HTML into my username because it makes it really obvious where we’ve forgotten to do it.

And when I first did this these horizontal rules started appearing all over the place which let me know we weren’t doing the output safety correctly. If you’re not sure why a horizontal rule is important just imagine I’ve written a script tag instead. That opens you up to all kinds of cross-site scripting attacks.

A bit of history: in Rails 2, by default nothing was escaped. So if you just put something into your view template it would show it straight away. You needed to figure out every time you were using anything that was user controlled like their own display name you had to escape it with the h function.

And obviously that leads to loads of places where that gets missed. And so in Rails 3 they switched it around and they created a thing called safe buffers, which means everything is escaped by default. If you have a helper that outputs HTML then it ends up double escaped.

And to help with this migration they created two little get out of jail free cards. One of which was calling html_safe on a string and then that wouldn’t be escaped again, or the other one is calling raw. Now like I said before because our developers are often inexperienced and these are quite powerful tools, it leads to situations where they can easily make mistakes - especially if they’re copying what they see in one place and using it in another.

So I took a lot of time to go through all of our legacy code and refactor it to get rid of these html_safes and raws. And for the most part this was straightforward. The Rails translation system lets you mark translations as “we expect to see HTML in here” and therefore you don’t need to escape them you can get rid of that raw tag.

You can get rid of html_safe from your helpers by using certain things like there’s a safe_join which is aware of how SafeBuffers work and all the Rails ActionView tag helpers do that too.

The final challenge and the hardest one to deal with was flash messages because ActionDispatch is not, the flash system is not aware of SafeBuffers. It only takes Strings, Arrays or Hashes, and so if you have a flash message which has some HTML in it you need to come up with something - you can’t just commit it to flash because it will only take a string and it will think that it’s an unescaped string.

So if you go on to Stack Overflow or anywhere and say how do I put HTML in a flash message they all give something along these lines which is just put it as a string and then when it comes to the view call html_safe on it. Jobs a good’un.

But the problem with this is that html_safe does not escape anything. It’s a declaration by the developer that what you’re handling is definitely HTML safe. There is no possible code injection in this and in this case that’s fine because it’s a hard-coded string. There’s no user information there.

But we had a problem where one of our flashes said that we were blocking users so whenever a moderator blocked a user… Well one day one of the users had some script tags in their name, and the scripts ran. So despite this being what everybody says to do, no don’t do that.
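As a rough illustration of why that goes wrong, here is a minimal sketch in plain Ruby. It does not use Rails: `TrustedHtml`, `mark_safe` and `output` are hypothetical stand-ins for SafeBuffer, `html_safe` and the view's output step, written only to show that marking a string as safe is not the same as escaping it.

```ruby
require "erb"

# Hypothetical stand-in for a safe buffer: a String subclass that only
# records "the developer says this is safe". It never changes the contents.
class TrustedHtml < String; end

# Roughly what marking a string as HTML-safe does: it marks, it does not escape.
def mark_safe(string)
  TrustedHtml.new(string)
end

# Roughly what a view does on output: escape everything that is not marked.
def output(value)
  value.is_a?(TrustedHtml) ? value.to_s : ERB::Util.html_escape(value)
end

display_name = "<script>alert(1)</script>"

# Anti-pattern: interpolate user data, then declare the whole string safe.
unsafe = output(mark_safe("Blocked user #{display_name}"))

# Safer: escape the user-controlled part before it joins trusted markup.
safe = output(mark_safe("Blocked user #{ERB::Util.html_escape(display_name)}"))

puts unsafe
puts safe
```

The first line printed still contains a live script tag, because the declaration covered the user's name as well as the hard-coded text.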

We need another way of doing it which means we are using SafeBuffers and because we can’t do them from the current action to the future action, we can’t pass the safe buffers across, we need to think about using the SafeBuffer approach after the event.

So this is a method I found somewhere else I want to share with you guys which is to make a partial with whatever HTML you want in it. So we have a couple paragraph tags here. We take advantage of the fact that Flash can take hashes and say which partial we want, any locals that we want to pass it to, we can store that hash in the Flash and then a small helper at the end which detects if we are using a hash, calls that ActionView render on that hash and it will do exactly what you want. If you’re just passing strings that’s fine, just use the Flash message as is.
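The shape of that technique can be sketched in plain Ruby. This is a simulation under stated assumptions, not the code from the project: the `PARTIALS` table stands in for view files, and `render_partial`, `render_flash`, the `user_blocked` partial and the `display_name` local are all hypothetical names. In a Rails app the helper would hand the hash to the view's own render call instead.

```ruby
require "erb"

# Stand-in for view partials; in a Rails app these would be template files.
PARTIALS = {
  "user_blocked" => "<p>Blocked <%= h(display_name) %>.</p><p>They have been notified.</p>"
}.freeze

# Render a partial with locals. Values go through h(), mimicking the
# escape-by-default behaviour of a Rails view.
def render_partial(name, locals)
  context = Object.new
  context.extend(ERB::Util) # provides h() inside the template
  locals.each { |key, value| context.define_singleton_method(key) { value } }
  ERB.new(PARTIALS.fetch(name)).result(context.instance_eval { binding })
end

# The small helper: a hash means "render this partial with these locals",
# anything else is treated as an ordinary string and escaped on output.
def render_flash(message)
  if message.is_a?(Hash)
    render_partial(message["partial"], message["locals"])
  else
    ERB::Util.html_escape(message)
  end
end

# Only plain strings and hashes are stored in the flash.
flash = {
  "notice" => {
    "partial" => "user_blocked",
    "locals" => { "display_name" => "<script>alert(1)</script>" }
  }
}

html = render_flash(flash["notice"])
puts html
```

The markup lives in the partial, where the normal escaping rules apply, so the user's name is escaped while the paragraph tags are not.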

I think this is a really nice technique and I have yet to see it documented anywhere. I found it by digging through some MySociety code base, when they’d had the same problem and I thought - yeah, that works.

Internationalization, as I said before, is quite important for our project and one of the interesting quirks we came across recently actually shows some of the limitations of the Rails internationalization framework which I wasn’t previously aware of so I thought I could share that with you tonight as well.

Many of you have seen this before which is you can use, you can leverage Rails in order to choose what translation you want depending on how many things you have.

So if you have one cat, it will use this translation name that’s a singular. If you have multiple in English, then you can… it’s all the same… ‘cats’.

This is different in different languages. I lived in Poland a couple of years ago and so I learned in Poland they have three different plural forms. You have one for one, you can see that two, three and four have the same pluralization but five changes to a different pluralization. And this can be handled by the translation system, that’s not a problem. It is worth noticing though that not only two “koty” but also 22, 33, these also count as few in Polish.

So it’s more like the number behaves like few. Most things that end in a two, three or four count as few, most things that don’t count as many. So it’s not strictly one, few, many.
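To make the "behaves like few" idea concrete, here is a small sketch of the Polish rule for whole numbers, following the CLDR plural rules as I understand them. The method name is made up for illustration and this is not how the Rails translation system is implemented.

```ruby
# Plural category for Polish, whole numbers only.
# one:  exactly 1
# few:  ends in 2, 3 or 4, except 12, 13 and 14
# many: everything else
def polish_plural_category(count)
  return :one if count == 1

  last_digit = count % 10
  last_two_digits = count % 100
  if (2..4).cover?(last_digit) && !(12..14).cover?(last_two_digits)
    :few
  else
    :many
  end
end

[1, 2, 5, 12, 22, 33, 100].each do |count|
  puts "#{count}: #{polish_plural_category(count)}"
end
```

The exception for 12 to 14 is the reason for saying "most" things that end in two, three or four.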

And this is important for all the situations where you want some special text for when you have nothing or something. So we have it for comments like there is no comment yet or I need a cat. When we were doing this, I have there are no comments as a separate translation until one day one of our translators popped up and said I can’t translate this into Latvian and that’s because this is not zero as in nothing, this is zero as in numbers that behave like a zero and in Latvian that’s zero but it’s also 10, 20, 30, 100, 140 and so on.

So they couldn’t have a translation which worked for both the special case of zero and also for 10, 15 and so on. And so we dug into this and in the Ruby internationalization framework, they have the standard six translation keys that work with the system - zero, one, two, few, many and other. And we saw most of these already.

But the more comprehensive approach is to have specifically zero and specifically one as translation keys and Ruby internationalization just treats these as aliases of zero and one and that blows up the whole approach to translating in Latvian. So if you want your translations to work, if you want a special message for the zero case, then you have to have a separate translation and then you can do the rest for counts.
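A short sketch shows how the problem appears. It assumes the CLDR plural rule for Latvian whole numbers and uses English text as a stand-in for the Latvian strings; the method names and the translation table are hypothetical.

```ruby
# Plural category for Latvian, whole numbers only.
# zero:  ends in 0, or the last two digits are 11 to 19
# one:   ends in 1, except 11
# other: everything else
def latvian_plural_category(count)
  last_digit = count % 10
  last_two_digits = count % 100
  if last_digit.zero? || (11..19).cover?(last_two_digits)
    :zero
  elsif last_digit == 1 && last_two_digits != 11
    :one
  else
    :other
  end
end

# A translator was asked to fill in "zero" with a "nothing here" message.
COMMENT_TRANSLATIONS = {
  zero: "No comments yet",
  one: "%{count} comment",
  other: "%{count} comments"
}.freeze

def comments_label(count)
  template = COMMENT_TRANSLATIONS.fetch(latvian_plural_category(count))
  template.gsub("%{count}", count.to_s)
end

[0, 1, 2, 10, 15, 21].each do |count|
  puts "#{count}: #{comments_label(count)}"
end
```

Ten and fifteen comments both come out as "No comments yet", which is why the special message for an empty list needs its own translation outside the count-based keys.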

Sounds pretty obscure, right? But we don’t want to make the same problem again and we don’t expect any of our developers to know this or understand it. So we made a test and this runs during commits and it checks the en.yml file for any translation keys that start with zero and then it can warn the developer, no, don’t do this because it doesn’t work and then we don’t have to think about it every time we’re merging pull requests.

Our developers will only be told about this when they come across it. It’s not like something they have to read in the documentation and so again that kind of targeted feedback is super interesting.
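A check along these lines could look roughly like the following. This is a sketch, not the project's actual test: it assumes the check means "any key named zero", works on an inline sample instead of the real en.yml, and the helper name is made up.

```ruby
require "yaml"

# Walk a nested translation hash and collect the dotted path of every
# key named "zero".
def zero_key_paths(node, path = [])
  return [] unless node.is_a?(Hash)

  node.flat_map do |key, value|
    current = path + [key.to_s]
    found = key.to_s == "zero" ? [current.join(".")] : []
    found + zero_key_paths(value, current)
  end
end

# Inline sample standing in for config/locales/en.yml.
sample = YAML.safe_load(<<~LOCALE)
  en:
    comments:
      count:
        zero: No comments
        one: "%{count} comment"
        other: "%{count} comments"
    diary:
      title: Diary
LOCALE

offenders = zero_key_paths(sample)
puts offenders
```

In a test suite the final step would be an assertion that the list is empty, with the offending paths in the failure message so the developer sees exactly which key to move.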

The final thing is going to combine those two topics of output safety and internationalization.

When I was looking at this one day and I thought that looks fair enough. We’re using the safe buffer-based approach. We’ve got a translation that we’re expecting html because we want that name to be the link but this is safe buffer aware so it’s doing the escaping.

Everything looks good. It’s hard to understand is there an output safety problem here and then I was looking at a translation. It wasn’t Polish but I was looking at that and I thought wait a minute if we’re permitting html what happens if it’s the translator who puts the dodgy html in there.

Because we have almost 2,000 translators and 200,000 translations. We’re not inspecting these manually. They’re just being automatically brought over.

So I had a quick check and it turned out- it was fine. Nobody else had figured this out before me.

But it did mean that I wanted to make sure that there was no dodgy html in any of the translations and that there never would be. And the easiest way to do that is to make sure there’s no html in any of them at all.

And that was an opportunity to do a lot of refactoring and pull out any of these translation strings where we have html in them to break them down into their parts, have the translations with no html and then use the views to build it up.

And to a certain extent this is a better way of doing it anyway. You’ll find these creep into projects but it’s a separation of concerns issue. You don’t want to have to go hunting in your translations to change paragraph tags or things like that. Of course we added a test.

So this test does two things. One it warns our developers if they ever think “oh I don’t know how to do this properly I’m just going to put a bold tag or a link directly into the translation”. It will fail and it’ll do that.

It’ll also give us a warning if any of our translators are trying something they shouldn’t do, because the build will fail when all the translations are imported.
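The idea behind such a test can be sketched as follows. It is an illustration under assumptions, not the project's real test: the regular expression is a deliberately simple guess at "looks like an HTML tag", the sample stands in for a locale file, and the helper name is hypothetical.

```ruby
require "yaml"

# A deliberately simple pattern for "something that looks like an HTML tag".
HTML_TAG = %r{</?[a-zA-Z][^>]*>}

# Walk a nested translation hash and collect the dotted path of every
# string value that contains markup.
def html_translation_paths(node, path = [])
  case node
  when Hash
    node.flat_map { |key, value| html_translation_paths(value, path + [key.to_s]) }
  when String
    node.match?(HTML_TAG) ? [path.join(".")] : []
  else
    []
  end
end

# Inline sample standing in for a locale file.
sample = YAML.safe_load(<<~LOCALE)
  en:
    welcome:
      intro: "Hello <b>%{name}</b>"
      link_text: OpenStreetMap
      more: "See %{link} for details"
LOCALE

offenders = html_translation_paths(sample)
puts offenders
```

Running the same walk over every imported locale file is what lets one check cover both developers and translators.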

So that’s about it for me. That was a view on the things that we do in our project to deal with our main challenges.

But this is where you guys come in.

I would like your help. Read our contributing, help review our PRs, make new PRs, think about other ways that you guys know about how we can improve the developer experience, other techniques that we could be using.

If you don’t fancy coding on your time off I totally understand that. We could still do with help with issue triage or join our enormous team of translators.

Here’s some LRUG special things because this is the most technical audience I’ve ever talked about this stuff.

We’re part way through replacing the JavaScript with Hotwire. If you’re a Hotwire expert please come and tell me how to do it more easily. Restful controller renaming, super important to make the code clean and to meet developer expectations but I really struggle to come up with good names for certain controllers and certain actions on controllers.

We currently use a C++ utility to speed up our XML and JSON APIs because we can’t get the performance we need out of the Ruby view system. If you include the same partial 10,000 times it takes dozens of seconds to run. If you know how to do XML, big XML, big JSON APIs at scale come and talk to me, or any of your more typical Railsy type things like we want to build a whole notification system, models, database things, it’s a lot. Your help would be very much welcome.

So that’s it for me. Thanks very much. Any questions?

Q [This person is asking about using fake streets (known as trap streets) to understand if people are stealing your map data]

Yeah we do.

So for the benefit of the recording this is about trap streets and map data to see if people are violating the copyright. We deliberately don’t do anything wrong but you can still spot OpenStreetMap data quite easily because it’s never complete. So if you look at a map and it has some of the buildings mapped or some of the buildings mapped accurately and some of the buildings mapped not so accurately, you can easily compare that with OpenStreetMap data and then you know they’re using OpenStreetMap data.

And we can also go back through the history because we record the history of everything and publish the history of everything. We have services which will show you if they took the data six months ago or nine months ago you can do comparisons there as well.

Q [This person is asking which Rails version the OpenStreetMap app runs on]

It’s 7.1.

Yeah the latest version and we test on Ruby 3.0 and newer and that’s so that we have quite a wide range of supported Ruby versions so that a typical developer who might just have whatever the latest Ubuntu LTS is on their laptop can get started straight away. So we try and be as accommodating as possible with Ruby versions, Postgres versions, all that kind of stuff because these are the technical barriers that we don’t want to get in the way of people who might not be that technical to start with.

Q If I wanted to contribute is there a list of like we really want seasoned Ruby developer help on these issues? Like I know a lot of open source projects have this would be good for a first-time contributor type issues so that you want this would be good for a first-time experienced contributor. Is it easy for me who’s never looked at a good app before to identify those?

Yeah it’s really hard to get started with finding the right issues. We’ve tried the good first issue approach which is often not for super experienced people but it tends to attract people who are not actually that interested in OpenStreetMap or the development and more kind of just clicking somewhere on GitHub that points you to the repos. We get an awful lot of people saying could you assign this to me please and then we never hear from them.

The more major projects are not very well highlighted but I would look for the refactor tag on issues because these are generally things where I know there’s some work that needs to be done and it’s a kind of long-term thing, it’s not just, you know, a two-line fix for a bug. But otherwise I would encourage you just to start having a look at things and something will pique your interest pretty quickly with 500 different things to work on and I’m sure one of them will be interesting.

Q [This person is asking two questions: 1 - How customised is the OpenStreetMap app vs standard rails? 2 - What is the hardest thing you’ve had to fix?]

Was the second one the hardest single thing or the hardest thing I had to fix? Well I’ll answer the second one first.

The good thing with the hardest things to fix is that Tom does all the hardest things and I do the easier ones. So when it comes to trying to get OAuth 1.0a to work happily with OAuth 2.0 at the same time it’s beyond me. Tom takes care of that.

The hardest thing that I had to work on was probably either one of those things like the flash messages took a lot of looking around.

Actually trying to import a data set which is published as a node package and pull that in. I eventually found Frozen Record which is a great way of having static models that are not database backed but in a way that still feels quite like Active Record. So again these… The hardest things are usually ones where it seems pretty trivial and then I spend an entire day searching for… “Come on there must be an easier way of doing this”.

And the first one was how much customization stuff. Well a lot less than there used to be, put it that way. I think there’s a few things where like controller naming or the routes - they’re not, it’s not heavily customized it’s just really basic and kind of like ideas that were popular back in Rails 1.0. So we have like a thousand routes which would just get this target and we’ve been slowly trying to make them into resourceful routing instead of just every path being individually handled.

That’s the least Rails-like thing we’re doing in the app. But apart from that most of the stuff in the app is CRUD things. Adding users, adding user blocks, this that and the other.

In the wider sense, one of the most interesting things that I haven’t covered here, one of the most custom things, is to do with the minutely publishing of the data from the database. To do that consistently every 60 seconds with in-flight transactions and being able to handle the Postgres transactions in mid-flight whilst still publishing all the data.

That’s been something which has been super interesting and lots of different approaches over the last 20 years to keep that working as Postgres versions change.

Q [This person is asking how much upstream contribution OpenStreetMap has made to Rails]

No not big ones usually just small bug requests on things that are upstream that haven’t worked. So the multi-database handling stuff that’s gone into Rails 7 didn’t work for us up until the most recent version of Rails because nobody expects to have 20 years of migrations, and so connecting to multiple databases and old migrations and things that stuff wasn’t working.

I don’t know - Tom have we pushed anything else up?

Yeah we used to be heavily involved in the composite primary keys gem and then Rails now has composite primary keys and so that was great to step away from that. Tom had to do a lot of work upstream with that. I saw another hand a minute ago.

Q: [This person is asking how much data is in the OpenStreetMap database]

How much data is it? Tom, how much data is it?

Yeah, yeah, many terabytes! As far as we’re aware, it’s the largest open source dataset that’s using Postgres, because anything measured in terabytes is generally either just autogenerated data, or commercial. So there has been a few cases where we’ve had Postgres consultants who are interested in what we’re doing. And our lack of horizontal scalability, things like that, it’s still… big machine gets you much further than you might think.

Q [This person is asking about which postgresql extensions the OpenStreetMap database uses]

Yeah, curiously, despite being heavily map based we don’t use PostGIS which is the geospatial extension for Postgres. We don’t use that at all. Partly for historical reasons, because PostGIS was nowhere near as good as it is now, 20 years ago, partly to do with rounding errors, and not storing stuff in floating points which PostGIS likes to do. We have our way of storing coordinates which uses integers. Yeah, just straight Postgres.
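As a rough sketch of what storing coordinates as integers can look like: the scale factor of ten million (seven decimal places) is an assumption made for this example, as are the method names; the talk only says that integers are used.

```ruby
# Assumed scale: seven decimal places of a degree.
SCALE = 10_000_000

# Convert a coordinate in degrees to the integer that would be stored.
def to_stored(degrees)
  (degrees * SCALE).round
end

# Convert a stored integer back to degrees for display.
def from_stored(value)
  value.to_f / SCALE
end

latitude = to_stored(51.5074)
longitude = to_stored(-0.1278)
puts latitude
puts longitude
puts from_stored(latitude)

# Integer arithmetic is exact, where the floating point equivalent is not.
puts to_stored(0.1) + to_stored(0.2) == to_stored(0.3)
puts 0.1 + 0.2 == 0.3
```

Comparisons and sums on the stored integers are exact, which is one way to avoid the kind of rounding surprises that floating point values can produce.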

[Tom mentions another reason for not using PostGIS - topologically aware data]

Oh yeah, and the other reason for not using PostGIS is that we have a topologically aware data model. And that doesn’t map neatly onto what are called OGC standards for data representation. This is like a whole other talk that I’d need to do.

Alright, I think we’ll leave it there. Thanks very much.

This post was posted on 19 October 2024 and tagged OpenStreetMap, development

Can OpenStreetMap run on Heroku?

It's been a long-term goal of mine to see if we can get the OpenStreetMap Website and API running on Heroku.

So can we? In short - mostly, but it's still got some rough edges.

But why?

OSMF already has the website and API up and running, obviously, and it needs some pretty specialist hardware for the databases. There are no plans whatsoever to move all that to Heroku, so at first glance it seems rather pointless for me to investigate if it actually works. So why am I spending my time on this?

Firstly, it's useful for other people to have their own working copies of the website. They can deploy the same code, but use their copy to map historic features that aren't relevant in OpenStreetMap. Or they can use it to make collaborative fantasy maps, or maps for proposed local developments, or other projects that aren't suitable for putting in the main database.

Secondly, it's also useful for developers. We already have a system on the OSMF development server for testing branches, which allows non-developers to check how proposed features work, debug them and provide feedback on them. But if you have a branch to test you need to ask the sysadmins, and wait for them to deploy it for you, and you don't get full control over the instance if you want to debug it or to dive into the database.

But mainly, I'm doing this because Heroku is a useful yardstick. Even if you aren't interested in using Heroku itself, it's perhaps the best example of a modern Rails hosting environment. If we can make it easy to deploy on Heroku, we'll have made it easy to deploy in all manner of different deployment situations, whether that's in-house in a controlled corporate environment, or using cloud native builder images for Kubernetes, or something else. So I think it's worth using Heroku to find out where the kinks are in deploying our code, and then to iron those kinks out.

Some ironing is required

In no particular order, here are some of the problems I came across today:

So a few minor hiccups, but nothing particularly insurmountable. I was pleasantly surprised! While we work on resolving some of these problems, there's a whole load of things that already work.

How we got here

I would describe getting to this stage as a useful "side effect" of lots of previous work. The most recent was replacing the compiled database functions with SQL equivalents, which are significantly easier to handle when you don't control the database server. Previous work on using ActiveJob for trace processing makes the worker dyno setup possible, and moving the quad_tile code to an external gem made this part of the installation seamless.

Did I mention the price?

So how much does deploying your own copy of the OpenStreetMap website on Heroku cost? Nothing. Zero dollars per month. Heroku gives you 1 web and 1 worker dyno for free on each application that you deploy, and that's all we need. It offers a free database tier too, which is enough to get started. Of course these have limitations, and you'll need to pay for some S3 storage if you want user images (and traces, in the future). But I think it's worth pointing out that you can spin up your own deployment without having to pay, and dive into testing those new features or creating your own OSM data playground. I'm sure that'll be useful to many people.

This post was posted on 6 November 2019 and tagged 12factor, development, heroku, OpenStreetMap

Smoother API upgrades for OpenStreetMap

Recently, my coding attention has drifted towards the OpenStreetMap API. The venerable version 0.6 API celebrated its 10th birthday earlier this year, so it's had a good run - clocking up double the age of all previous versions combined!

Years ago, whenever we wanted to change the API, we gathered all the OSM developers in one room for a hack weekend, and discussed and coded the changes required there and then. For the most recent change, from 0.5 to 0.6, we had to do some database processing in order to create changesets, and so we simply switched off the API for a long weekend. Nobody could do any mapping. And everyone had to upgrade all their software that same weekend, since anything that used the 0.5 API simply stopped working.

In short, I don't think we can use this approach next time! Moreover, I think this 'big bang' approach to making API changes is actually the main reason that we've stuck with API version 0.6 for so long. Sure, it works, and it's clearly 'good enough'. But it can be better. Yet with such a high barrier to making any changes, it's not surprising that we've got ourselves a little bit stuck on this version.

So I've started work on refactoring the codebase so that we can support multiple API versions at the same time. There's a bunch of backwards-incompatible changes that we want to make to the API, but since those are not fundamentally changing the concepts of OSM, it makes sense to run version 0.6 and 0.7 at the same time. This gives us a smooth transition period, and allows all the applications and tools that use the current API version a chance to upgrade in their own time.

SotM 2019 was in Heidelberg last month, and among other things gave me a chance to talk face-to-face with lead developers from four different OpenStreetMap editors. The editors are the key pieces of software that use the API, so this was a rare chance to check in and find out what changes they would like to see. I spoke with developers of Vespucci, iD, JOSM - and of course Level0! My task now is to figure out which of their requests and suggestions are blocked by having to break API compatibility, or which ones can be implemented in the current API version.

One example of a breaking change is moving to structured error responses, a topic Simon Poole raised with me straight away. Often the API returns a human-readable error message when there's a problem. For example, during a changeset upload, the API could return "Precondition failed: Way 1234 is still used by relations 45,3557,357537". This isn't particularly handy, since editors then need a bunch of regular expressions to try to match each error message, and to carefully parse the element ids out of those human readable sentences. Changing these into structured responses would be more useful for developers, but that requires a version increment.
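As a rough illustration of the difference, the sketch below first parses the message quoted above with a regular expression, then reads the same facts from a structured body. The JSON shape and its field names are hypothetical; no such format has been agreed.

```ruby
require "json"

MESSAGE = "Precondition failed: Way 1234 is still used by relations 45,3557,357537"

# Today: the editor has to pattern-match the human-readable sentence.
def parse_message(message)
  match = message.match(/\APrecondition failed: Way (\d+) is still used by relations ([\d,]+)\z/)
  return nil unless match

  { :way => match[1].to_i, :relations => match[2].split(",").map(&:to_i) }
end

# Hypothetical structured equivalent (field names invented for this sketch).
STRUCTURED = '{"error":"precondition_failed",' \
             '"element":{"type":"way","id":1234},' \
             '"used_by":{"type":"relation","ids":[45,3557,357537]}}'

# With structure, the ids are read directly and no regular expression is needed.
def parse_structured(body)
  data = JSON.parse(body)
  { :way => data["element"]["id"], :relations => data["used_by"]["ids"] }
end

p parse_message(MESSAGE)
p parse_structured(STRUCTURED)
```

The regular expression breaks as soon as the wording of the sentence changes, whereas the structured version only depends on the field names.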

Another change that I am particularly keen to see, but perhaps not so keen to code, is to make diff uploads more robust. They currently suffer from two main problems. The first is that the diff can be large and therefore complex to parse and apply to the database, and so it can take a long time to receive a response from the API. For mobile editors, in particular, it can be hard to maintain an open connection to the server for long enough to hear back whether it was successfully applied to the database. So I'd like to move to a send-and-poll model, where the API immediately acknowledges receipt of the diff upload, and gives the client a URL that it can poll to check on progress and to receive the results. That way if there's a hiccup on a cellular connection, the editor can just poll again on a fresh connection.
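A minimal in-memory sketch of the send-and-poll idea follows. The class name, the `/uploads/...` path and the status values are all hypothetical, and a real implementation would sit behind HTTP with a proper background worker.

```ruby
require "securerandom"

# Hypothetical stand-in for the server side of a send-and-poll diff upload.
class DiffUploadService
  def initialize
    @jobs = {}
  end

  # Acknowledge receipt immediately and hand back a path the client can poll.
  def submit(diff)
    id = SecureRandom.uuid
    @jobs[id] = { :status => "pending", :diff => diff, :result => nil }
    "/uploads/#{id}"
  end

  # Stands in for the background worker applying the next waiting diff.
  def process_next
    _id, job = @jobs.find { |_, candidate| candidate[:status] == "pending" }
    return unless job

    job[:status] = "done"
    job[:result] = "applied #{job[:diff].length} changes"
  end

  # The client can call this as often as it likes, on any connection.
  def poll(path)
    @jobs.fetch(path.split("/").last).slice(:status, :result)
  end
end

service = DiffUploadService.new
path = service.submit(["create node", "create way"])
p service.poll(path) # still pending
service.process_next
p service.poll(path) # done, with the result attached
```

Because polling is a read-only request, a dropped connection costs the editor nothing more than asking again.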

Relatedly, the second problem is what the editor should do if it never hears back from the server after sending a diff upload. If the mapper has added a hundred new buildings, presses save, and the editor gets no response from the API, should the software try again? If it doesn't, then the mapper loses all their work. If it tries the upload again (and again?), it could be creating duplicate map data each time. It's impossible to know what happened - did the request get lost on the way to the server, or did the server receive it, save it, and it was the response that got lost? My proposal is to include an idempotency key in the diff upload. The server will store these, and if it sees the same upload key for a second (or third) time, it will realise the response has been lost somewhere, and no harm is done. A similar approach can then be taken with other uploads, like creating notes or adding comments, to allow safe retries for those too.
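Here is a small sketch of how an idempotency key makes retries safe. The class and method names are hypothetical, and the in-memory hash stands in for whatever storage the server would really use.

```ruby
# Hypothetical server-side handling of uploads that carry an idempotency key.
class IdempotentUploads
  attr_reader :applied_count

  def initialize
    @responses = {}    # idempotency key => response already sent
    @applied_count = 0 # how many uploads actually changed the data
  end

  def upload(key, diff)
    # A repeated key means the earlier response was lost on the way back:
    # return the stored response instead of applying the diff again.
    return @responses[key] if @responses.key?(key)

    @applied_count += 1
    @responses[key] = "saved #{diff.length} elements"
  end
end

server = IdempotentUploads.new
buildings = Array.new(100) { |i| "building #{i}" }

first = server.upload("key-abc", buildings)
retry_response = server.upload("key-abc", buildings) # editor retries after a timeout

puts first
puts retry_response
puts server.applied_count
```

The editor generates the key once per save and reuses it for every retry of that save, so the hundred buildings are only ever created once.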

There are many other upgrades that I'd like to make to the API, focussed on a broader strategy of making life easier for editor developers. But without the ability to run multiple versions in parallel, none of these changes are likely to happen. So that's my first priority.

This post was posted on 17 October 2019 and tagged development, OpenStreetMap

Better Trace Uploads, New API Call and More i18n for OpenStreetMap

June was a busy month for my ongoing development work on OpenStreetMap.

The highlight of the month for me was that we wrapped up the project to move GPX upload processing to the built-in job queue system. This means that we can run the GPX processing jobs in parallel, and spread the tasks between different machines instead of being limited to one process on one machine. This makes it much less likely that your uploaded trace will get stuck at the back of a queue.

It also means that the notification emails that you receive will be translated into your preferred language; it makes it much easier to test the processing or add new features; and we can get rid of some old code that nobody was looking after.

The key piece of work to get this released was being able to create the trace animations from within our ruby code. mmd-osm worked with the developer of the gd2-ffij rubygem to add support for animated gifs. That was the last piece of the puzzle, and when a new version of the gem was released, we were good to go.

Towards the end of the month I developed a new api/versions API call. This allows applications an easier way to find out what versions of the API the server supports, rather than using the unversioned api/capabilities call (the api/0.6/capabilities call is what applications should use for checking capabilities, since the capabilities could change between different API versions in future). It seems almost completely unimportant at the moment, since we’ve been on version 0.6 for over 10 years now, but it’s solving another piece of the jigsaw that will allow us to run version 0.7 (and perhaps future versions) alongside an existing API version, which we’ve never done before. There’ll be more work from me to support API 0.7 over the next few months.
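One way a client might use the list of versions is sketched below. It assumes the version strings have already been extracted from the api/versions response, and `pick_api_version` is a hypothetical helper rather than part of any editor.

```ruby
# Pick the newest API version that both the server and the client understand.
# Returns nil when there is no version in common.
def pick_api_version(server_versions, client_versions)
  common = server_versions & client_versions
  # Gem::Version compares "0.10" as newer than "0.9", unlike a string sort.
  common.max_by { |version| Gem::Version.new(version) }
end

p pick_api_version(["0.6"], ["0.6"])               # today's situation
p pick_api_version(["0.6", "0.7"], ["0.6"])        # an editor that hasn't upgraded yet
p pick_api_version(["0.6", "0.7"], ["0.7", "0.6"]) # an editor that supports both
```

During a transition period, an editor that hasn't upgraded simply keeps choosing 0.6 until the server stops listing it.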

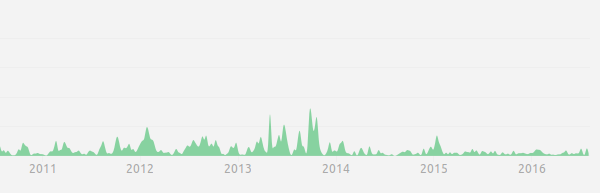

Internationalisation (i18n) is a topic that I keep working on, since we’ve got thousands of mappers who don’t speak English, and problems with the i18n system can be really jarring for them when using the site. One feature we use all over the place is describing things that occurred in the past, and previously it wasn’t possible to accurately translate times like “3 days ago” into all languages. I’ve now added a mechanism to make this possible, and it’s a solution that I might take upstream for wider use in other rails projects.

I also rolled up my sleeves and tackled some of the endless and thankless issue pruning! We still have over 450 open requests between two different issue trackers. Some of the issues are important, but are lost in the pile. But many are feature requests that are either quite niche, or ideas that seem reasonable but that realistically we aren’t likely to ever get around to doing. I find these the hardest to deal with, since it’s hard to close them without being unwelcoming. Yet the variety of small features that could theoretically be added is endless, and the project doesn’t gain anything from having an endless list of feature requests in the queue. So I try to deal with them as politely as I can.

Finally, some more refactoring. This time some minor changes, including the way we create forms and render partials. It doesn’t make the website run faster, but it’s less code for developers to read, and means that new developers are likely to see better existing code and make new features more easily. I try to keep creating a better and more enjoyable ‘developer experience’, particularly for new developers who might not be familiar with the tools, in the hope we can attract and retain some more developers.

That’s all for now!

This post was posted on 3 July 2019 and tagged OpenStreetMap

Moderation, Authorisation and Background Task Improvements for OpenStreetMap

We’re making steady progress with improving the OpenStreetMap codebase. Although it’s been a busy year for me, I managed to wrap up the moderation pull request, and it was merged and deployed in mid June. Lots of different people have worked on this over the years, starting way back as a GSoC project in 2015! It was great to finally get it integrated into the site.

Last week mavl, one of our site moderators, posted that nearly a thousand issues were logged by OSM volunteers in the first 3 months - at an average rate of over 10 per day! That’s a lot more than I was expecting, so future development ideas could involve working with the OpenStreetMap moderators to support their workflows.

Also in June, the Ruby for Good team picked us as one of their projects for their annual development event. A bunch of good stuff came out of that, in particular a new “quad_tile” gem, which builds one of our C extensions automatically during gem installation, and therefore saves new developers from having to deal with that.

In addition, they also kick-started work on our new authorisation framework, based on CanCanCan, which I outlined previously. It’s taken longer than I would have liked to get that ready, but the code was merged and deployed last week, and I’ve started refactoring the rest of our controllers to use it. This will allow us to remove a lot of home-grown authorisation code and use a standard approach instead, which means less custom code to maintain as well as being more familiar to new developers. I see this authorisation framework as a key enabler for future projects - we’ll be able to develop new features faster, while making sure that only the right people have access.

Another recent upgrade has been to set up and start using the Active Job framework. This allows tasks to be run in the background, and is now a standard part of Ruby on Rails. We’re already using it for sending notification emails, so now if you post a comment to a popular diary entry, you don’t need to wait while the system sends dozens of notification emails to all the subscribers - they are queued and dealt with separately. A small improvement, perhaps, but the job framework will really come alive when we start using it for processing GPX trace uploads. I hope to have more news on that soon.
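The idea of queueing work and dealing with it separately can be shown in plain Ruby. This is only an illustration of the pattern, not Active Job itself, and the method name is hypothetical.

```ruby
# Enqueue one notification per subscriber and let a worker thread send them.
def notify_subscribers(subscribers)
  queue = Queue.new
  sent = []

  # The worker drains the queue independently of whoever enqueued the work.
  worker = Thread.new do
    while (recipient = queue.pop)
      sent << "notification for #{recipient}"
    end
  end

  # The "request" only enqueues, which is cheap; in a web app the response
  # to the person posting the comment could be returned at this point.
  subscribers.each { |recipient| queue << recipient }

  queue << nil # sentinel telling the worker to stop
  worker.join  # only so that this demo can return the results
  sent
end

p notify_subscribers(%w[alice bob carol])
```

With a real job framework the worker is a separate process, so the web request never waits for it at all.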

If you want to follow our progress more closely, or get involved in the development, head over to our GitHub repository for full installation and development guides, along with all the issues and pull requests that we are dealing with!

This post was posted on 9 November 2018 and tagged active job, authorisation, development, moderation, OpenStreetMap, rubygems

Groundwork for new features in OpenStreetMap

Last summer I finished a large refactoring, and thought it would be nice to change tack, and so I decided to try to push through a new feature as my next project. Refactoring is worthwhile, but it has a long-term pay-off. On the other hand, new features show progress to a wider audience, and so new features are another avenue to getting people interested and involved in development.

I picked an old Google Summer of Code project that hadn’t really been wrapped up, and immediately spotted a bunch of changes that would be needed to make it easier for others to help review it. Long story short, it needed a lot more work than anyone realised and it’s taken me a few months to get it ready. I’ve learned a few lessons about GSoC projects along the way, but that’s a story for another time.

I want to keep going with the refactoring, since a better codebase leads to happier developers and eventually to better features. But it’s worthwhile having some sort of a goal, otherwise it’s hard to decide what’s important to refactor, and to avoid getting lost in the weeds. There have been discussions in the past about adding some form of Groups to OpenStreetMap, and it’s a topic that keeps on coming up. But I know that if anyone tried implementing Groups on top of our current codebase, it would be impossible to maintain, and it’s far too big a challenge for a self-contained project like GSoC.

So what things do I think would make it easier to implement Groups? The most obvious piece of groundwork is a proper authorisation framework. Without that, deciding who can view messages in or add members to each group would be gnarly. I also don’t want to add many more new features to the site with our “default allow” permissions - it’s too easy to get that wrong, particularly adding something substantial and complex like Groups.

I had a stab at adding the authorisation framework a few weeks ago, but quickly realised some more groundwork would help. We can make life easier for defining the permissions if we use standard Rails resource routing in more places. However, that involves refactoring controller methods and renaming various files. That refactoring becomes easier if we use standard Rails link helpers, and if we use shortened internationalization lookups.

So there’s some more groundwork to do before the groundwork before the groundwork…

This post was posted on 11 April 2018 and tagged development, OpenStreetMap, rails, refactoring

Factory Refactoring - Done!

After over 50 Pull Requests spread over the last 9 months, I’ve finally finished refactoring the openstreetmap-website test suite to use factories instead of fixtures. Time for a celebration!

As I’ve discussed in previous posts, the openstreetmap-website codebase powers the main OpenStreetMap website and the map editing API. The test suite has traditionally only used fixtures, where all test data was preloaded into the database and the same data used for every test. One drawback of this approach is that any change to the fixtures can have knock-on effects on other tests. For example, adding another diary entry to the fixtures could break a different test which expects a particular number of diary entries to be found by a search query. There are also more subtle problems, including the lack of clear intent in the tests. When you read a test that asserts that a given Node or Way is found, it was often not clear which attributes of the fixture were important - perhaps that Node belonged to a particular Changeset, or had a particular timestamp, or was in a particular location, or a mixture of other attributes. Figuring out these hidden intents for each test was often a major source of the refactoring effort - and there’s 1080 tests in the suite, with more than 325,000 total assertions!

Changing to factories has made the tests independent of each other. Now every test starts with a blank database, and only the objects that are needed are created, using the factories. This means that tests can more easily create a particular database record for the test at hand, without interfering with other tests. The intent of the test is often clearer too, since the objects are created from factories in the test itself, which makes it explicit which attributes are the important ones.

An example of the benefits of factories was when I fixed a bug around encoding of diary entry titles in our RSS feeds. I easily created a diary entry with a specific and unusual title, without interfering with any other tests or having to create yet another fixture.

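    def test_rss_character_escaping
      # Create a diary entry whose title needs escaping in the feed
      create(:diary_entry, :title => "<script>")
      get :rss, :format => :rss
      # The title must appear escaped in the RSS output, not as raw markup
      assert_match "<title>&lt;script&gt;</title>", response.body
    end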

All in all, this took much, much longer than I was expecting! Looking back, I feel I might have picked a different task, but I’m glad that it’s all done now. I’m certainly glad that I won’t have to do it all again! Moving on, it’s now easier for me and the other developers to write robust tests, and this will help us implement new features more quickly.

Big thanks go to Tom Hughes for reviewing all 51 individual pull requests! Thanks also to everyone who lent me their encouragement.

So the big question is - what will I work on next?

This post was posted on 1 June 2017 and tagged development, OpenStreetMap, rails, refactoring, tests

Steady progress on the OpenStreetMap Website

Time for a short status update on my work on the openstreetmap-website codebase. It’s been a few months since I started refactoring the tests and the work rumbles on. A few of my recent coding opportunities have been taken up with other projects, including the blogs aggregator, the 2017 budget for the OSMF Operations Working Group (OWG), and the new OWG website.

With the fixtures refactoring I’ve already tackled the low-hanging fruit. So now I’m forced to tackle the big one - converting the Users fixtures. The User model is unsurprisingly used in most tests for the website, so the conversion is quite time-consuming and I’ve had to break this down into multiple stages. However, when this bit of the work is complete most future Pull Requests on other topics can be submitted without having to use any fixtures at all. The nodes/ways/relations tests will then be the main thing remaining for conversion, but since the code that deals with those changes infrequently, it’s best to work on the User factories first.

As I’ve been working on replacing the fixtures, I’ve come across a bunch of other things I want to change. But before tackling all that I’m going to mix it around a bit. My goal is to alternate between the work I think is the most important, and also helping other developers with their own work. We have around 40 outstanding pull requests and some need a hand to complete. There are plenty of straightforward coding fixes among the 250 open issues that I can work on too. I hope that if more of the issues and particularly the pull requests are completed, this will motivate some more people to get involved in development.

If you have any thoughts on what I should be prioritising - particularly if you’ve got an outstanding pull request of your own - then let me know in the comments!

This post was posted on 21 February 2017 and tagged development, OpenStreetMap, rails, refactoring

Upgrading the OpenStreetMap Blogs Aggregator

One of my projects over the winter has been upgrading the blogs.openstreetmap.org feed aggregator. This site collects OpenStreetMap-themed posts from a large number of different blogs and shows them all in one place. The old version of the site was certainly showing its age. The software that powered it, called PlanetPlanet, hasn't been updated for over 10 years, and can't cope with feeds served using https, so an increasing number of blogs were disappearing from the site. Time for an upgrade.

My larger goal was moving the administration of the site into the open, in order to get more people involved. Shaun has maintained the old system for many years and has done a great job. Unfortunately there were tasks that could only be done by him, such as adding new feeds, removing old feeds, and customising the site. To make any changes you had to know who to ask, and hope that Shaun had time to work on it for you. It was also unclear what criteria a blog feed had to meet to be added, and even more so, if and when a blog should be removed.

The challenge was therefore to move the configuration to a public repository, move the deployment to OSMF hardware, create a clear policy for managing the feeds, and thereby reduce the barriers to getting involved all round.

Thankfully, other people did almost all of the work! After I investigated the different feed aggregation software options - most of them are barely maintained nowadays - I reckoned that Pluto was the best choice. It turns out Shaun had previously come to the same conclusion, and had almost completed the migration himself. Then Tom put together the Chef deployment configuration, which was the second half of the work. So all I had to do was finish converting the list of feeds, make a few template changes, and everything was ready to go. After a few weeks' delay while the sysadmins worked on other tasks, the new site went live on Friday.

If you know of a blog that should be added to the site, please check our new guidelines. If you have any changes you want to make to the site, the deployment, or the software that powers it, now is your chance to get involved!

This post was posted on 31 January 2017 and tagged development, OpenStreetMap, osmf

Refreshing the OpenStreetMap Codebase

The codebase that powers OpenStreetMap is older than any other Rails project that I work on. The first commit was in July 2006, and even then, that was just a port of an existing pre-rails system (which is why you might still see it referred to as "The Rails Port").

It's a solid, well-tested and battle-hardened codebase. It's frequently updated too, particularly its dependencies. But if you know where to look, you can see its age. We have very few enduring contributors, which is surprising given its key position within the larger OpenStreetMap development community. So I've been taking a look to learn what I can do to help.

Someone just getting started with Rails will find that many parts of our code don't match what's in any of the books they read, or any of the guides online. More experienced developers will spot a lot of things that were written years ago, and would nowadays be done differently. And some of our developers, particularly our Summer of Code students, are learning what to do by reading our existing code, so our idiosyncrasies accumulate.

I started trying to fix a minor bug with the diary entries and a bunch of things struck me as needing a thorough refresh. I started the process of refactoring the tests to use factories instead of fixtures - 2 down, 37 to go. I've started rewriting the controllers to use the standard rails CRUD method names. And I've made a list of plenty of other things that I'd like to tackle, all of which will help lower the barrier for new (and experienced) developers who want to get stuck into the openstreetmap-website code.

I hope that progress will snowball - as it becomes easier to contribute, more people will join in, and in turn help make it even easier to contribute.

But it's time-consuming. I need help to share these projects around. If you're interested, please get stuck in!

This post was posted on 15 September 2016 and tagged development, OpenStreetMap, rails, refactoring