Tag Archives: General

- We need a deselect button. When you have a feature selected it’s not obvious that to deselect you just click somewhere else on the map

- The wiki page on wheelchair mapping is unclear about tagging accessibility of toilets when they are in another amenity (e.g. pub) rather than standalone toilets (amenity=toilets)

- One person triggered EntityUI exceptions when zooming in and out. I was surprised to see the exception showing - normally these only show on debug flash plugins

- Still confusion on how to add features that aren’t in the grid of icons (Current solution is to double-click to create a POI, suggestion is to have an “other” poi to drag/drop)

- The conflict dialog, which you see when two people edit the same road, isn’t particularly helpful. It only gives the id of the feature, which doesn’t help. There’s no method to reconcile the differences (or even see what they are). Yes/No labels on the buttons are bad.

- The backgrounds dialog needs better labels. e.g. “Bing Aerial Imagery” since “Bing” is meaningless

- Need to drag/drop “new point” (as above - shows you how often it came up!)

- Maybe need a “More…” button on the presets to provide some way to reassure people they aren’t definitive and show them how to figure things out

- Click-again (that is, clicking twice slowly) should also create a POI

- It’s hard to read the road names, especially when they are at an angle

- When duplicate nodes are shown, it isn’t easy to figure out what they mean

- One person made the advanced tag panel go haywire by having multiple new tag entries - and managed it repeatedly

- Wiki documentation on bookmakers still sucks. We ran into this last year - there’s a lot of bookmakers in London, and especially if you know a different term for it (gambling shop etc) the documentation is hard to find

- Would be great to highlight mistakes, e.g. tagging building=yes on a node. This happened a couple of times when people had a node of an area selected when they started adding tags

- Copying tags from nodes to ways (see above)

- Newbies shouldn’t be exposed to the footway vs path controversy on the wiki.

- Nobody ever finds the search box on the wiki, especially when they are using browser-based find on the Map Features page.

- People accidentally mousewheel out too far repeatedly when editing. Maybe we should prevent it at low zooms

- barrier = entrance vs building = entrance is unclear

- Nobody reads past the first paragraph of the Key pages on the wiki before just skim-reading the rest. Which means sentences like “Some people use the tag ‘foo = bar’ when they should instead use ‘baz = bar’” become “….. ‘foo = bar’ ….” and that gets used.

- The public transport pages on the wiki are dreadful, and newbies shouldn’t be exposed to two alternative tagging schemes. I have my own views on the whole new pointlessly-incompatible schema in any case.

- You can end up with both the rails_port search panel and potlatch 2 open at the same time. If you try closing the search panel you get the “leaving the page” warning, when you aren’t actually leaving the page.

- The “loading….” label isn’t obvious

- Areas of the map that haven’t yet had the data downloaded could be highlighted (or disabled) so that you don’t think it’s just empty.

- We need some way of saying “Zoom in!” when you have too much data showing at the given time and flash is crawling to a halt

- The data loading could be improved by having a tile-based map call instead of the current wms-like map call.

- Voids - SRTM has "no data" gaps in certain places of the world, where the radar reflections went haywire. These happen in marshes (not of interest) and mountains (of great interest!), especially over the Alps. ASTER is void-filled already, so the clever-but-inaccurate void-filling I use wouldn't be necessary

- Resolution - It's great that SRTM covers the whole world, but I'd love to see it at a higher resolution. ASTER's nominal resolution is three times greater than SRTM, so it's very attractive.

- Arctic coverage - SRTM only goes as far north as 60°N, which is a bit of a problem in Scandinavia. Although there's GTOPO30 data for these areas, that's got a horizontal resolution measured in kilometres, so not exactly great for me. ASTER covers those areas too, up to 83°N.

TileMill, Carto and the Transport Map

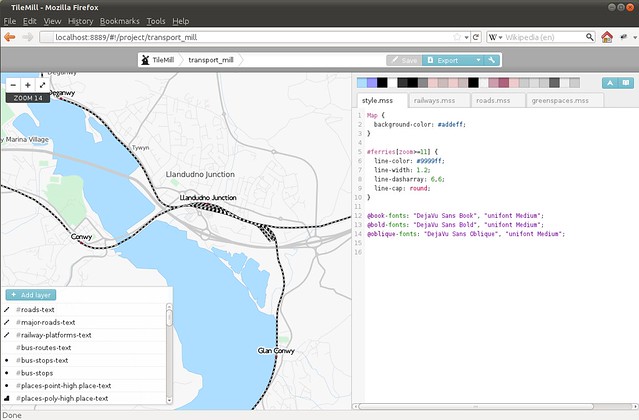

A few months ago I started exploring some new technologies from DevelopmentSeed - namely Carto and TileMill. Carto is a CSS-style map description language, similar to Cascadenik, and TileMill is a browser-based application that lets you view maps while you're designing them.

Initially my efforts were a complete flop - at that point neither Carto nor TileMill had any support for storing the map data in PostGIS, which is a key component of making maps from OSM data. A month later and support was added, so I got cracking - mainly bashing my head against the weirdness of the node package management system NPM. But after a lot of effort and only a little swearing, I got it all working. It's totally worth it.

Designing maps is hard - both in the amount of complexity in the style rules (there's lots of colours, widths and so on) and also in the data - every town is different, and the way a map looks varies wildly between town and countryside. So a key thing is to be able to iterate quickly, to make changes and to see how things look in different areas. My previous toolchain - effectively just a modified version of generate_image.py - was a complete pain. To check different areas I'd need to find out the coordinates, pick a zoom level, wait for the image, check it, rinse, lather and repeat. The power of having live-updating maps in TileMill is not to be underestimated!
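To give a feel for the bookkeeping in that older workflow, here is a minimal sketch of working out a Web Mercator bounding box for a chosen centre point, zoom level and image size. The function name, the default image size and the 256-pixel tile convention are assumptions for illustration; none of this is taken from generate_image.py itself.

```python
import math

EARTH_RADIUS = 6378137.0  # metres, spherical Web Mercator (EPSG:3857)


def mercator_bbox(lat, lon, zoom, width_px=800, height_px=600):
    """Return (minx, miny, maxx, maxy) in Web Mercator metres for an image
    of the given pixel size, centred on lat/lon at a slippy-map zoom level."""
    # Project the centre point from degrees to Web Mercator metres
    x = EARTH_RADIUS * math.radians(lon)
    y = EARTH_RADIUS * math.log(math.tan(math.pi / 4 + math.radians(lat) / 2))
    # Metres per pixel at this zoom, assuming 256-pixel tiles
    resolution = 2 * math.pi * EARTH_RADIUS / (256 * 2 ** zoom)
    half_w = width_px * resolution / 2
    half_h = height_px * resolution / 2
    return (x - half_w, y - half_h, x + half_w, y + half_h)


# Hypothetical example: an 800x600 image over central London at zoom 14
bbox = mercator_bbox(51.5, -0.1, 14)
```

Every new test area means picking new values and re-running something like this before the image can even start rendering, which is the loop a live-updating map removes.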

My first map style produced with Carto and TileMill was the Transport layer. I had originally created the Transport layer using Cascadenik - similar to Carto, it's a CSS-inspired approach to describing map styling, and much easier than writing individual symbolizers in XML. Carto takes the idea another step forward with sophisticated rules nesting, which I've been using more and more in recent months. Since porting the Transport layer, I've ported all my other styles to Carto, but more on that some other time. If you're still creating mapnik style rules by editing XML, I'd advise you check out Carto instead!

This post was posted on 8 February 2012 and tagged General

OpenStreetMap usability revisited

At the end of September I took a half-day off from the day job and visited UCL. They were again running an Introduction to OpenStreetMap Mapping Workshop for their new masters students. I went along last year and created some great notes on usability for OSM newbies and did the same again this year. It’s rare for me to be able to watch (and help) so many newbies at the same time.

The main difference between last year and this has been the move to Potlatch 2 as the main editor, so I was especially looking forward to seeing how it performed. Also the students were this year focussing on wheelchair accessibility mapping, which mainly had implications for how well the detail in our presets matched this highly-detailed (and relatively unusual) mapping focus.

So here’s the list of notes that I made, in the order that I made them

Some of these things are familiar from previous user testing, some are new, and some will need a bit of discussion to tackle. This is a good opportunity to plug the upcoming Hack Weekend!

Thanks to Dr Patrick Weber for inviting me along.

This post was posted on 25 October 2011 and tagged General

Dealing with GDAL and Mapnik

Getting GDAL and Mapnik to play nice is a complete pain. Now that I've managed it, I'll give you the solution and explain some of the background.

Mapnik has two plugins for reading image files and using them as background in maps. For OpenCycleMap I currently use the "raster" plugin which reads the files directly, and I need to calculate and supply mapnik with all the coordinates for each image. It's a bit tedious, but when we set up OpenCycleMap a few years ago it was the only way we could get things to work.

Time moves on, and for new projects (and the massive forthcoming OpenCycleMap upgrade) I'm using the "gdal" plugin. This uses the wonderful (but sometimes infuriating) GDAL libraries to read the images and use any geo-information that's embedded within them. Saves a lot of hassle, and when you're dealing with tens of thousands of raster images then things like .vrt files are a godsend.

However, gdal has a secret lurking deep within its source code, and it's all to do with libtiff. libtiff is the library for reading .tif files, which are normally limited to 4GB in size. There's a new version of libtiff that deals with giant tiff files that are greater than 4GB (known as BigTIFF). The version of libtiff that comes with Ubuntu doesn't have BigTIFF support, so the GDAL packages use their own internal copy of the library. With version 0.8.0 of gdal, a feature was added to throw an error if multiple versions of libtiff were found active at the same time (in 0.8.1 this was downgraded to a warning). But for most applications using gdal there's no big problem - they use the gdal libraries, and hence use the BigTIFF version of libtiff. Meanwhile the standard libtiff (which loads of other things need - trust me, don't try uninstalling it) is left out of the picture and unused.

The problem is if your application - say, mapnik - compiles against both the system libtiff and gdal-with-BigTiff. If you're using gdal before 0.8.0 then you might get silent corruption of the output; if you're using 0.8.0 then mapnik will keep crashing with "ERROR 1: WARNING ! libtiff version mismatch : You're linking against libtiff 3.X but GDAL has been compiled against libtiff >= 4.0.0"

The trick to this is to avoid using any ubuntu packages of gdal - whether from the ubuntugis PPA repositories or anywhere else - until someone somewhere sorts it all out (probably in some future Ubuntu libtiff will have BigTiff support built-in). In the meantime, grab yourself gdal from source (0.8.0 is fine, btw) and configure it with

./configure --with-libtiff=/usr/lib

This forces gdal to use the system libtiff, and prevents any corruptions or segfaults in applications (like mapnik, which you'll need to recompile too). It means you don't get BigTiff support, but hey-ho. But most importantly, you can stop spending all your life juggling gdal versions trying to find which particular combination of packages and PPAs work (hint: none of them do). Thanks to Dane for the final clue - I've spent days of my life repeatedly battling this!

This post was posted on 9 August 2011 and tagged General

Transport Map

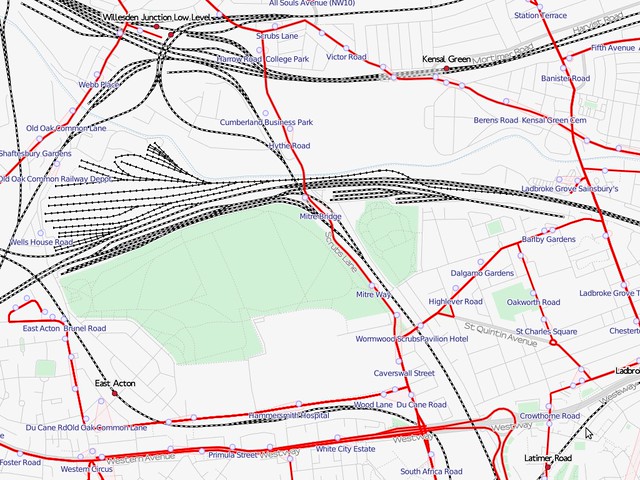

I recently added a new Transport layer to OpenCycleMap, which some of you will have spotted, and I hope you find interesting. The eagle-eyed among you may even have spotted it as one of the maps on Grant's curious OpenWhateverMap!

I first visualised bus routes in 2008, and ran a few experiments on railways as part of an experiment in terrain maps just over a year ago. In the mean time I've had these ideas on the back burner while I focussed on OpenCycleMap, but recently made some space to put them together into a fully-fledged project. I'm not the first to make a transport map, with ÖPNVKarte being a famous but no-longer-updated example, but the cartography is something personal that I have my own take on and I enjoy the challenge of creating special-interest maps. So while taking a break from terrain-data processing I put the transport map together. There are certain features of the map that are drawn directly from OpenCycleMap, and there are new developments that I will eventually re-incorporate too.

One of the phrases I started using a few years ago is "render and they will map" - or, in other words, if you are interested in a particular aspect of mapping data being improved then the best way to encourage mappers to improve that is to make it visible and useful. Certainly after I started rendering cycle routes their number in OpenStreetMap increased dramatically, and similarly for the other specialist things in OpenCycleMap. I'm hoping that my world-wide transport layer will encourage similar things in the area of transport data such as adding greater detail to railway stations. In the UK we have patchy levels of detail in bus stops and bus routes; even in London many bus stops have obvious errors in their names. I suspect since they aren't shown on the current mainstream maps nobody is noticing (and hence fixing) the problems, but, over time, the data should mature and the transport map will therefore improve too.

Another aspect of the OSM data is the high level of detail in the data, which can make some mid-range zoom levels incoherent. I've tackled this in two different ways. First, railway yards and sidings can be distracting when looking at inter-city rail corridors, so the transport map checks for the appropriate tags to hide them where possible. The tags aren't widely used at the moment since they aren't rendered on other maps, but in this way my map will improve over time as the mapping volunteers add ever greater details. In contrast, I've used the station buildings to obscure some of the track details at mid-zoom levels, and gone one step further in simplifying the building geometries at the same time - losing some of the complex detail of OSM can give better cartographic results. I've got further examples and some experiments lined up, and if my talk is accepted I'll be discussing these at State of the Map Europe later in the year.

For now I'm working on speeding up the rendering - this is the first full-blown map I've made with Cascadenik and the performance is surprisingly poor. I'll be trying to nail down what's causing this and share that with you soon. In the meantime I continue to work on the cartography and I'm interested in your feedback and questions.

This post was posted on 11 April 2011 and tagged General

The London Streets Challenge

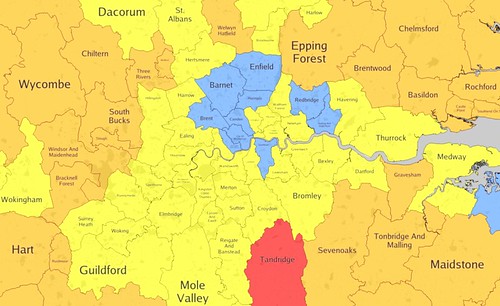

I've been mildly obsessed with the street-name comparison tool from ITO World over the last couple of weeks. It measures how many correctly named roads OpenStreetMap has in the UK by comparing it against the Ordnance Survey's OS Locator dataset. We also get to mark where corrections are needed in the OS data - even they aren't perfect!

According to the OS data combined with our list of corrections, OpenStreetMap (and hence OpenCycleMap) today has 600,414 correctly named streets - just over 70% of the total. What's more, in the last week alone we added 4,699 more streets - in the last 31 days we've added a whopping 19,309. If we keep this up we'll be done in about 13 months, but I hope we can up the rate even further.
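As a rough check on that estimate, the arithmetic can be sketched as below. The total number of streets isn't quoted here, so the sketch derives it from the "just over 70%" figure, which makes the result an approximation; the function name is purely illustrative.

```python
def months_to_finish(named, fraction_done, added_per_month):
    """Estimate the months left until every street is named,
    assuming the recent monthly rate simply continues."""
    total = named / fraction_done      # approximate total number of streets
    remaining = total - named
    return remaining / added_per_month


# 600,414 streets named (~70%), 19,309 added in the last 31 days
estimate = months_to_finish(600_414, 0.70, 19_309)
print(round(estimate, 1))  # prints 13.3
```

Treating the 31-day window as one month gives a little over 13 months, in line with the figure above.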

One particularly eye-catching part of the ITO World analysis is the map showing where we have good and bad coverage (unsurprisingly, good in the cities, bad in rural areas - we kinda knew that already). Areas are coloured red through yellow and finally turn blue at the 95% completion level - which throws up a couple of surprises like Orkney and Shetland being blue and Tandridge on the outskirts of London still being red. That led me to ponder a challenge for our London Pub Meetups:

Every time a London area gets beyond 95% - i.e. turns blue on the map - we'll have a celebratory pub meetup in that area.

We discussed this at the last pub meetup (although Harry is being a bit harsh if we're going to wait for 100%, I reckon 95% is close enough for now!). Not counting those that are over 95% already, we've had our first winner - Barking and Dagenham! Congratulations to all involved, and I invite someone to step forward and nominate a nice pub for the rest of us to come and visit.

If Barking seems like a bit of a trek for you to get to, then that's the motivation to improve areas closer to home!

For the record, there were 9 areas already blue before I opened the challenge - Redbridge, City of Westminster, Camden, Brent, Southwark, Kensington and Chelsea, Barnet, Enfield and Haringey. Perhaps these areas can be opened to the 100% challenge instead!

This post was posted on 2 February 2011 and tagged General

New OpenCycleMap tileserver

It's been about a month since I launched the new OpenCycleMap tileserver. The transition was actually much more hectic than I expected (I'll describe the EC2 failure some other time) but things have stabilised a bit since and are humming along nicely.

So what's new? Almost everything, actually, although mostly things you don't get to see. New hosting, for a start, on a dedicated server with both huge SATA disks (mirrored 1.5TB) and also a pair of blindingly fast 128GB striped SSD drives. The postgis database is on the fast disks, everything else being on the SATAs. The speed of the SSD drives really comes into its own while rebuilding the indexes during hourly updates, rather than being directly related to rendering.

I've also upgraded all the software behind the scenes - new versions of mod_tile (with much better monitoring), renderd, mapnik, osm2pgsql. The biggest change that you'll all notice is the tiles are now much smaller - around a third of the size - due to using palettised .png files. The new version of mapnik gives me the raster-handling needed to keep the background colouring at all zoom levels, which is a huge improvement. The biggest change I notice is that we're now using osm2pgsql in slim mode - allowing incremental updates to the database instead of weekly drop/reload - and all of the fancy route-relation handling is now built into osm2pgsql instead of being post-reload SQL queries. It's all much easier to deal with.

The whole upgrade project was delayed somewhat by the initial new server not working out. I'd specced out what I thought was an appropriate machine, and everything worked fine - loading the database, testing rendering, the works. Unfortunately the diff updates were, on average, taking around "equal time" - an hour and a bit for an hour's worth of OSM changes, about 20 hours for a day's worth of changes - and this with the machine doing nothing else! Disaster. So I scrambled to find a new provider with better hardware (at a reasonable price) and started again with the setup. Hat tip to Hetzner.de for their server offerings, and of course my ex-employers CloudMade for their continued sponsorship of the hardware.
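To see why "equal time" is such a disaster, here is a minimal sketch of how long a server needs to clear a backlog while new changes keep arriving. The function and the one-day backlog are illustrative assumptions; only the "hour and a bit" and "20 hours per day" figures come from the numbers above.

```python
import math


def catch_up_hours(backlog_hours, processing_ratio):
    """Hours needed to clear a backlog of OSM changes while new ones arrive.

    processing_ratio is the hours of work needed per hour of OSM changes.
    """
    if processing_ratio >= 1:
        return math.inf  # falling behind, or at best never gaining ground
    return backlog_hours * processing_ratio / (1 - processing_ratio)


# Hourly diffs at "an hour and a bit" each: the backlog never shrinks
hourly = catch_up_hours(24, 1.1)
# Daily diffs at 20 hours per day of changes: a one-day backlog takes ~120 hours
daily = catch_up_hours(24, 20 / 24)
```

Even in the better daily case, a machine that falls a single day behind needs roughly five days of uninterrupted work to recover, with nothing left over for rendering.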

Finally, I managed to sneak in some cartography changes while I was waiting for various things to complete (importing contours for the whole planet takes a few days!). Most of them were fairly subtle, and a fair few have gone unnoticed. Buildings are my new favourite, along with finally making new shield icons to replace the (ugh) nasty old ones (there's a story behind them, as with most things). Can you spot any of the dozen other changes?

What's next? I'd like to say more cartography updates, since there's lots more I'd like to change. But cartography always seems to take a backseat to server administration, and that's, as usual, starting to cause headaches. The huge numbers of people using the opencyclemap tileserver means that there's many millions of tiles to render, and the server is only - just about - keeping up with refreshing them as the underlying data changes. Something needs to be rethought about this, and I'm hoping to find some speed improvements from somewhere to render the tiles faster and let the server keep its head above water. The server troubles are massively exacerbated by thoughtless people out there (abetted by thoughtless software developers) who are bulk-downloading, or scraping, the tileserver for tens of thousands of tiles at a time - approximately none of which they ever view. Plans are afoot to deal with them though!

This post was posted on 8 September 2010 and tagged General

ASTER - Not worth it yet

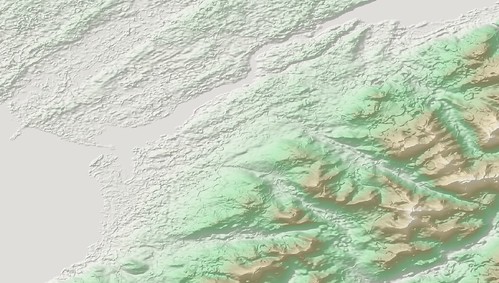

A few months ago NASA caused a stir by releasing a new global height dataset called ASTER. I use an earlier dataset (SRTM) for OpenCycleMap, which has a few problems that ASTER, at least initially, promised to solve. The three of primary interest to me are:

So far so good. But when I started work with ASTER in December, things spiralled rapidly downhill. First is the pointlessly irritating "order a dataset" website, that sucked up hours of going round in circles. It's like a shopping website from 1999. You need to use a stupid interface to order which 1°x1° tiles you want, and "All" isn't an option, despite there being 22,600 of them. It seems geared up for people who want a couple of dozen at a time, and the whole thing has a feel of being run by men with beards and sandals who'd rather you didn't use their website in anything newer than Netscape 4 on HP-UX.

When I read the README alarm bells started ringing. There's a section on "mole runs" and "pit artefacts" that sounded a bit worrying, but I wasn't sure how much of an issue they'd be - if they were small and few and far between then that's not much of a problem. But the biggest thing that caught my eye, buried on page six after pages of confirmation of how good the accuracy was, right at the end of a section as a throwaway comment, was the following statement:

Also, while the elevation postings in the ASTER GDEM are at 1 arc-second, or approximately 30 m, the detail of topographic expression resolvable in the ASTER GDEM appears to be between 100 m and 120 m.

That's a bit of a bomb-shell - it's saying that although it's got a much higher nominal resolution than SRTM, its effective resolution is about the same - there's not any more actual detail, just more pixels. That was almost enough to make me give up there and then, but it's still void filled and covering more area of the planet, which would be good improvements. So I grabbed the DEM (thankfully, they're straightforward GeoTIFFs) and got to work over Snowdon. I did some colouring and contours, and they both looked excellent and much better than what I made from SRTM. But then I tried hill-shading, and disaster!

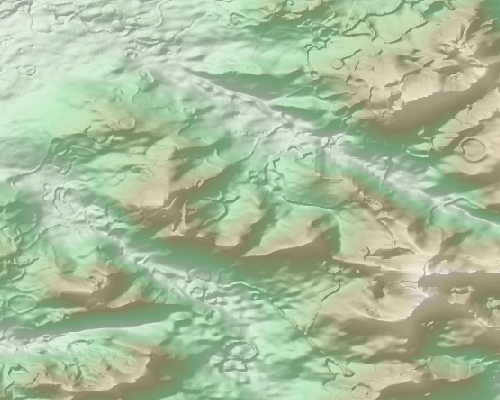

Here's the area around Snowdon (click through for original size):

and a detail of Snowdon itself:

The mole runs are everywhere - all across that image, even on the flat bits. And the pit artefacts are huge - the size of quarries, and really, really obvious. I honestly can't use that - maybe for a "what the world would look like if it looked like the moon" project, but nothing more serious than that. And considering that SRTM has only a handful of single-pixel voids in that area, the guys making ASTER have made something that's substantially worse than an oversampled SRTM. And considering they were even using SRTM to fill the gaps in the ASTER data, that's a pretty poor show. I started reading around and found a few people saying similar things. And when I thought about it, the "improvements" to the contours I saw could be recreated with SRTM by using gdalwarp to artificially increase the resolution (with some nice smoothing) before generating the contour lines. So I gain nothing from ASTER in the 95% of the planet that doesn't have significant voids, and in that same 95% it's not really usable.
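The SRTM oversampling trick could be sketched along these lines. The file names, the 1 arc-second target pixel size and the cubic spline resampling are assumptions for illustration rather than the settings actually used; `-tr` and `-r` are standard gdalwarp options.

```python
import subprocess


def oversample_command(src, dst, pixel_size_deg=1.0 / 3600, resampling="cubicspline"):
    """Build a gdalwarp command that resamples src onto a finer grid.

    pixel_size_deg is the target pixel size in degrees (1/3600 = 1 arc-second).
    """
    size = str(pixel_size_deg)
    return [
        "gdalwarp",
        "-tr", size, size,   # target resolution in x and y
        "-r", resampling,    # smooth interpolation instead of nearest-neighbour
        src, dst,
    ]


def oversample(src, dst):
    """Run the resampling; requires the GDAL command-line tools to be installed."""
    subprocess.run(oversample_command(src, dst), check=True)


# Hypothetical file names, shown only to illustrate the resulting command
cmd = oversample_command("srtm_snowdon.tif", "srtm_snowdon_1arcsec.tif")
```

The output has more pixels but no more real detail, which is exactly the point: smoother contours from the same underlying data.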

So for now, I've given up with ASTER. I might revisit it for the band between 60°N and 83°N, but it also says in the readme they have voids over Eurasia for that area (so much for void-filled, eh?). And it would be interesting to see if someone can fill the large SRTM voids with ASTER (which sounds back to front, hey-ho), but I don't have time for that. However, as they say in the docs, all these artefacts are happening in the boundaries where they have different numbers of original samples, so maybe a future version will have these automatically smoothed out, and if they can figure out how to stop their 15m sampling getting turned into 120m effective resolution, that would be awesome. But for now I would say it's not worth using it.

This post was posted on 13 January 2010 and tagged General